Find out about the process that went into creating A Generated Family of Man, the third volume of A Flickr of Humanity.

A Flickr of Humanity is the first project in the New Curators program, revisiting and reinterpreting The Family of Man, an exhibition held at MoMA in 1955. The exhibition showcased 503 photographs from 68 countries, celebrating universal aspects of the human experience. It was a declaration of solidarity following the Second World War.

For our third volume of A Flickr of Humanity, we decided to explore the new world of generative AI, using Microsoft Bing’s Image Creator to regenerate The Family of Man catalog (30th Anniversary Edition). The aim of the project was to investigate synthetic image generation and create a ‘companion publication’ to the original that will act as a timestamp, showcasing the state of generative AI in 2023.

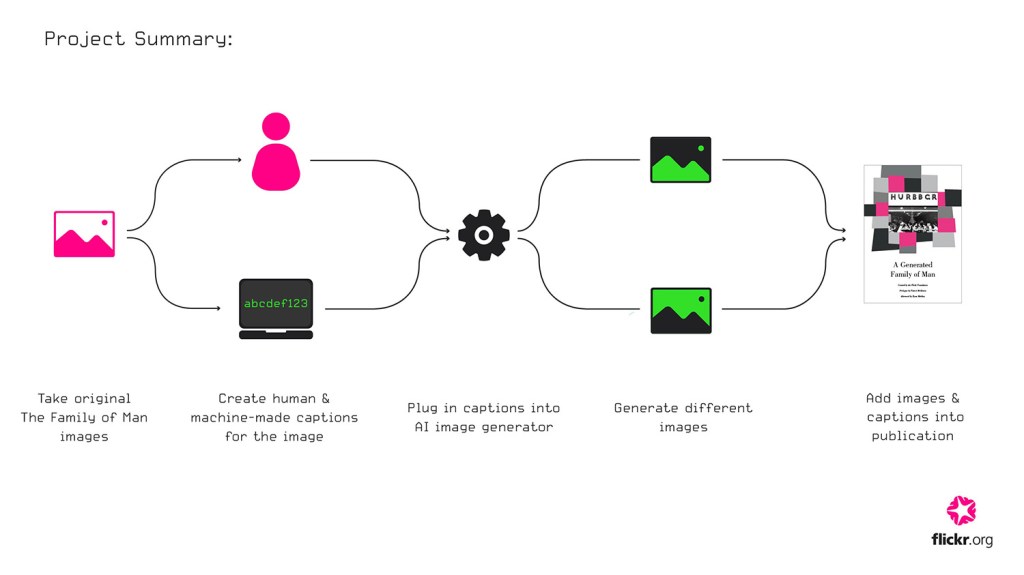

Project Summary

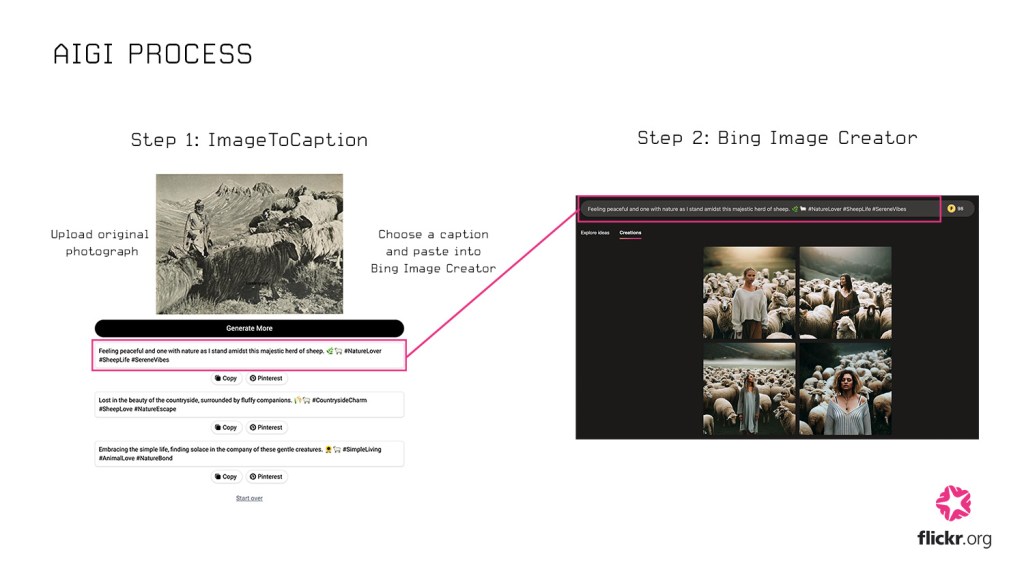

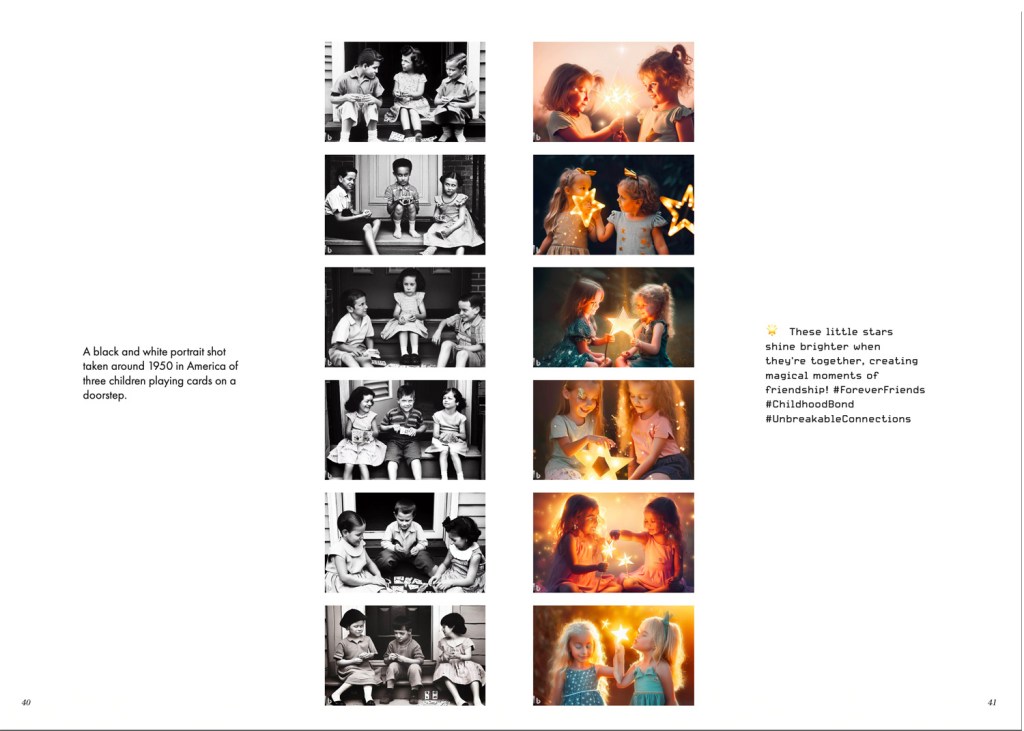

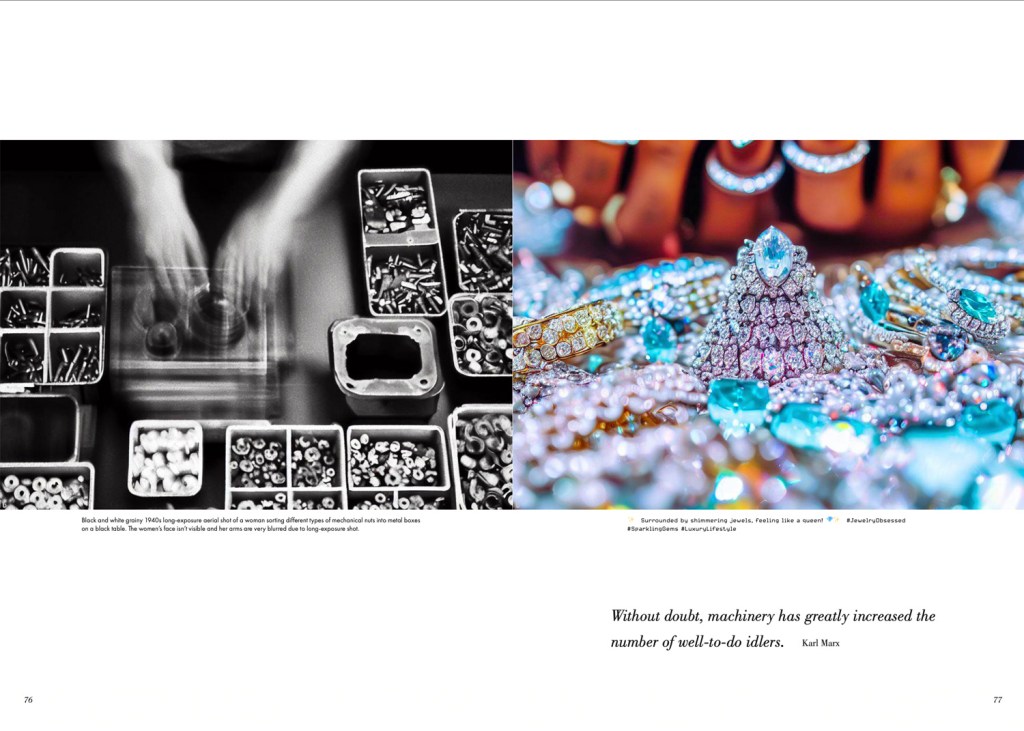

- We created new machine-generated versions of photographs from The Family of Man by writing a caption for each image and passing it through Microsoft Bing’s Image Creator. These images will be referred to as Human Mediated Images (HMI).

- We fed screenshots of the original photographs into ImageToCaption, an AI-powered caption generator which produces cheesy Instagramesque captions, including emojis and hashtags. These computed captions were then passed into Bing’s Image Creator to generate an image only mediated by computers. These images will be referred to as AI-generated Images (AIGI).

We curated a selection of these generated images and captions into the new publication, A Generated Family of Man.

Image generation process

It is important to note that we decided to use free AI generators because we wanted to explore the most accessible generative AI.

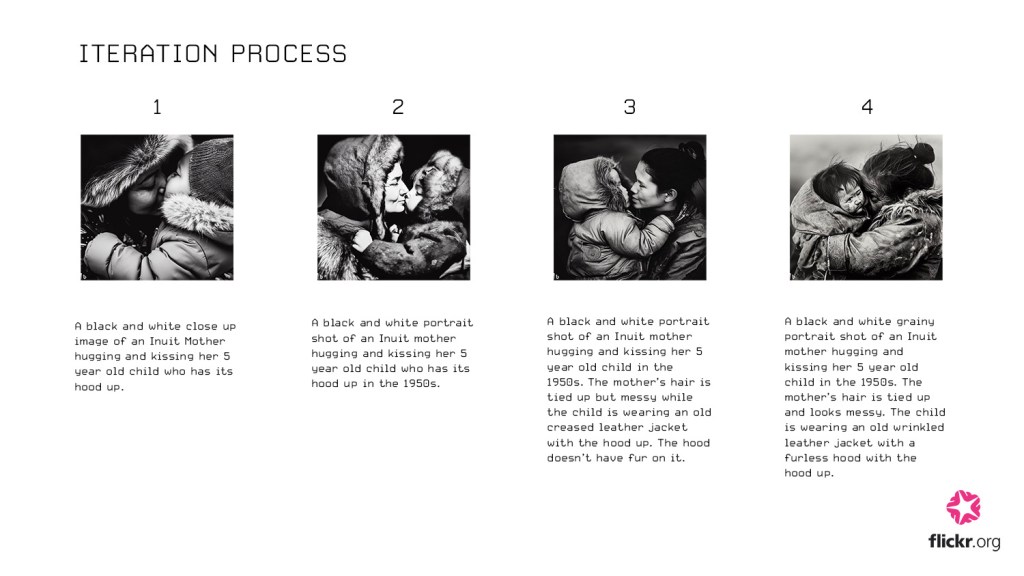

Generating images was time-consuming. In our early experiments, we generated several iterations of each photograph to try to get it as close to the original as possible, varying the caption in each iteration to work towards a better attempt. We then decided it would be more interesting to limit our caption refinements so we could see and show a less refined output, and set a limit of two caption-writing iterations for the HMIs.

For the AIGIs, we chose one caption from the three in the first set of generated responses. We used the selected caption for one iteration of image generation, unless the caption was blocked, in which case we picked another generated caption and tried that.
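The two rules above (at most two caption-writing iterations for an HMI, and one chosen caption with a fallback when blocked for an AIGI) can be sketched in a few lines of Python. This is only an illustration of the rules, not the tooling we used: the work was done by hand in the browser. The names `Attempt`, `generate_hmi`, `generate_aigi` and `fake_generate` are all hypothetical, and `fake_generate` is a stand-in that simply treats any caption containing the word "blocked" as blocked. The sketch also assumes every HMI attempt is kept.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Hypothetical type: a generator takes a caption and returns an image
# reference, or None when the caption is blocked.
Generator = Callable[[str], Optional[str]]

MAX_HMI_ITERATIONS = 2  # the caption-writing limit described in the text


@dataclass
class Attempt:
    caption: str
    image: Optional[str]  # None means the caption was blocked


def generate_hmi(human_captions: List[str], generate: Generator) -> List[Attempt]:
    """Run at most two human-written captions and keep every attempt."""
    return [Attempt(c, generate(c)) for c in human_captions[:MAX_HMI_ITERATIONS]]


def generate_aigi(machine_captions: List[str], chosen: int,
                  generate: Generator) -> Optional[Attempt]:
    """Try the chosen caption once; fall back to the others only if blocked."""
    order = [chosen] + [i for i in range(len(machine_captions)) if i != chosen]
    for i in order:
        image = generate(machine_captions[i])
        if image is not None:
            return Attempt(machine_captions[i], image)
    return None  # every candidate caption was blocked


def fake_generate(caption: str) -> Optional[str]:
    """Stand-in for an image generator: blocks captions containing 'blocked'."""
    return None if "blocked" in caption else f"image for: {caption}"


if __name__ == "__main__":
    print(generate_hmi(["first try", "second try", "ignored third"], fake_generate))
    print(generate_aigi(["blocked caption", "sunny picnic", "city street"],
                        0, fake_generate))
```

In the second call, the chosen caption is blocked, so the sketch moves on to the next generated caption, mirroring what we did by hand.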

Once we had a good sense of how much labour was required to generate these images, we set an initial target to generate half of the images in the original publication. The initial image generation process, in which we generated versions of roughly 250 of the original photographs, took around four weeks. We then had roughly 500 generated images (about half HMIs and half AIGIs), and we could begin the layout work.

Making the publication

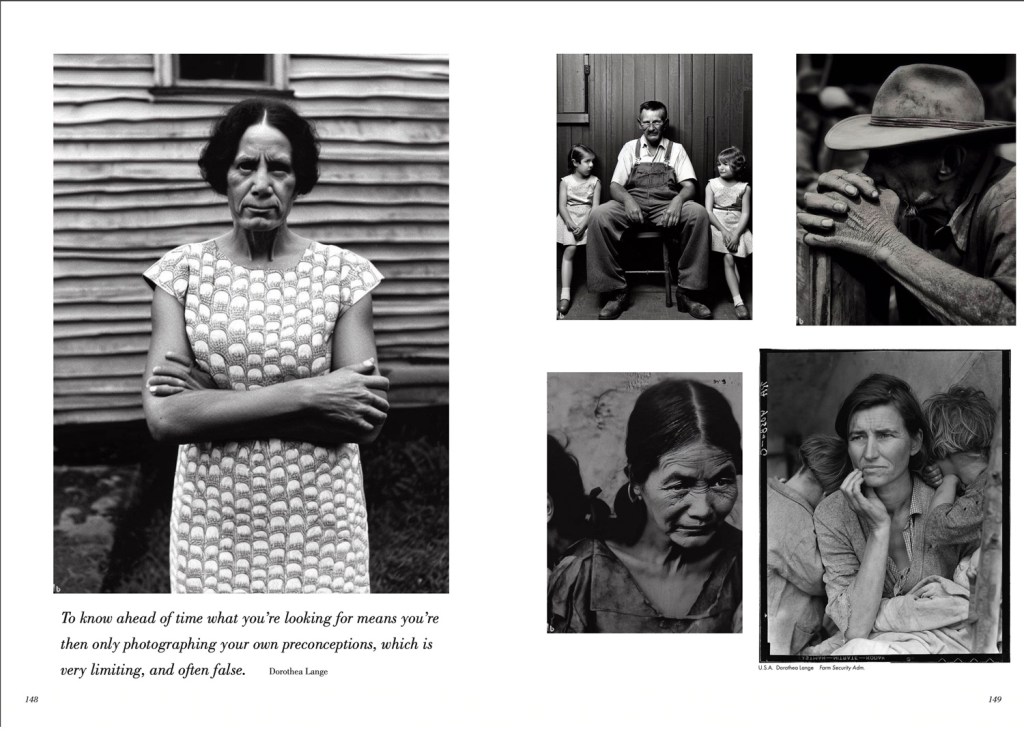

The majority of the photographs featured in The Family of Man are still in copyright, so we were unable to feature the original photographs in our publication. The exceptions are the two Dorothea Lange photographs we decided to feature, which have no known copyright.

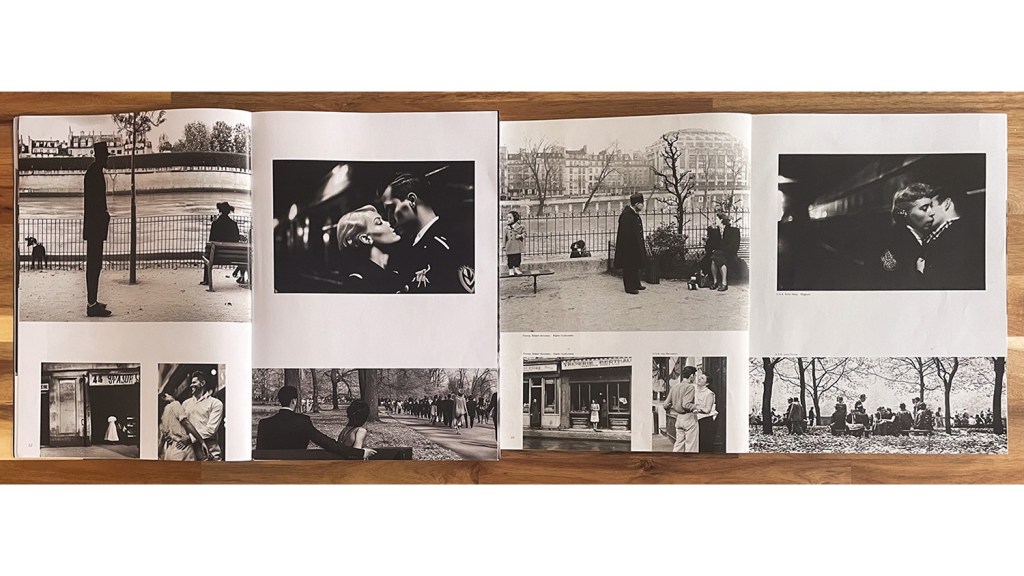

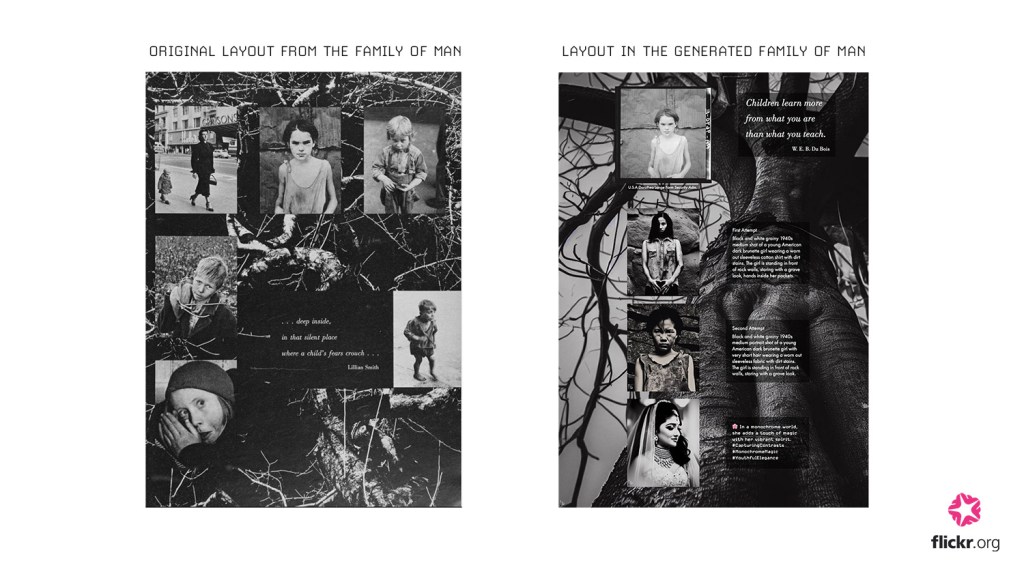

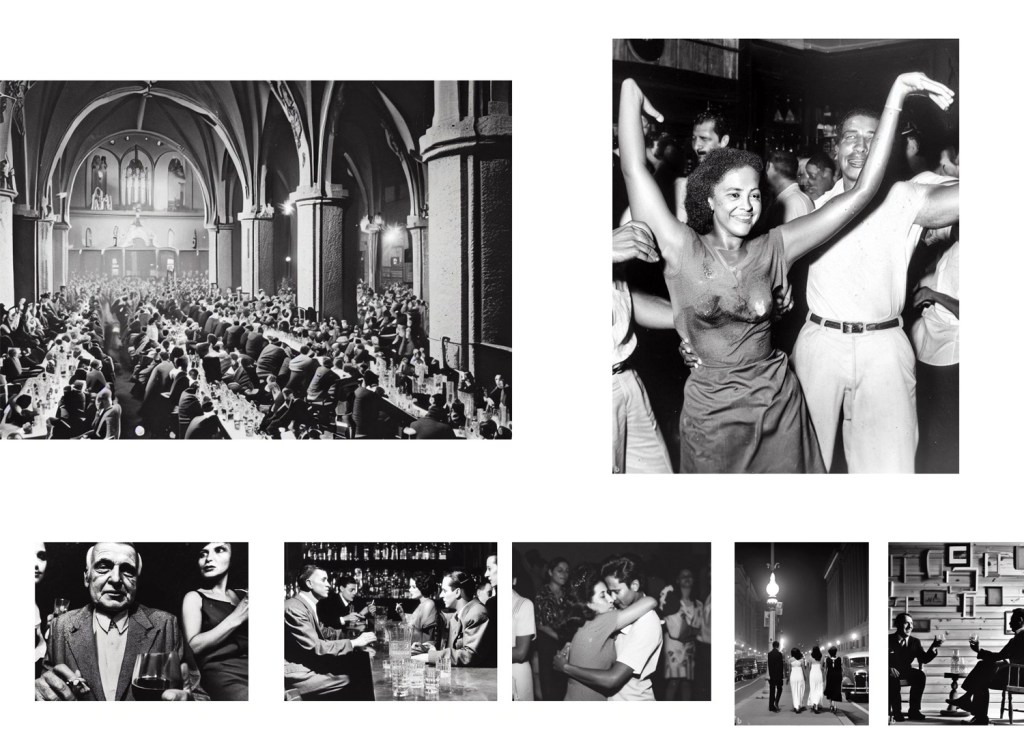

We decided to design the publication to act as a ‘companion publication’ to the original catalog. As we worked on the layout, we imagined the ideal situation: the reader would have an original The Family of Man catalog to hand, to compare and contrast the original photographs and generated images side by side. With this in mind, we designed the layout of the publication as an echo of the original, to streamline this kind of comparison.

It was important to demonstrate the distinctions between HMI and AIGI versions of the original images, so in some cases we shifted the layout to allow this.

Identifying HMIs and AIGIs

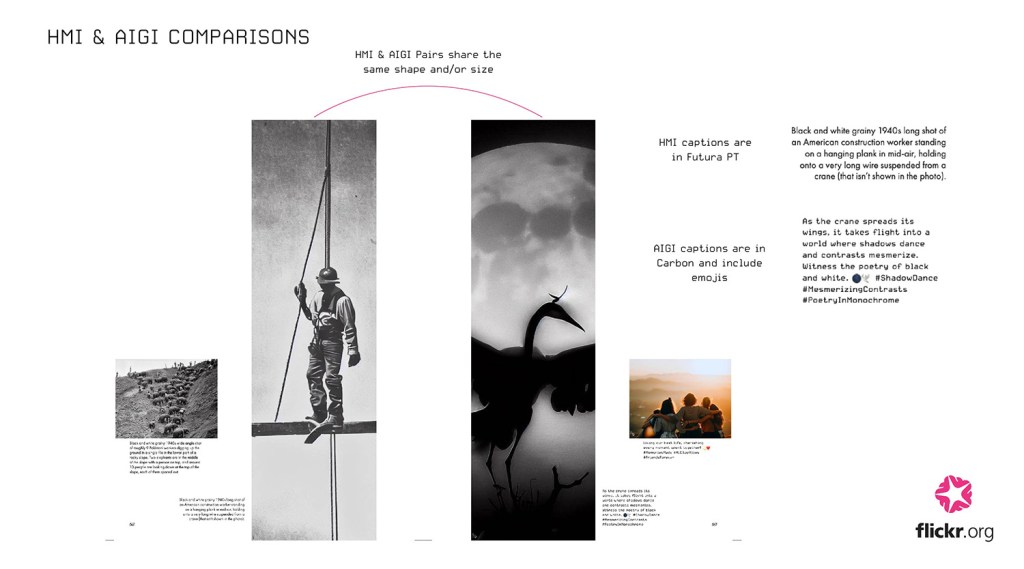

There was a lot of discussion around whether a reader would identify an image as an HMI or AIGI. All of the HMIs are black and white (because “black and white” and “grainy” were key human inputs in our captions to get the style right), while most of the AIGIs came out in colour. That in itself is an easy way to identify most of the images. We made the choice to use different typefaces on the captions too.

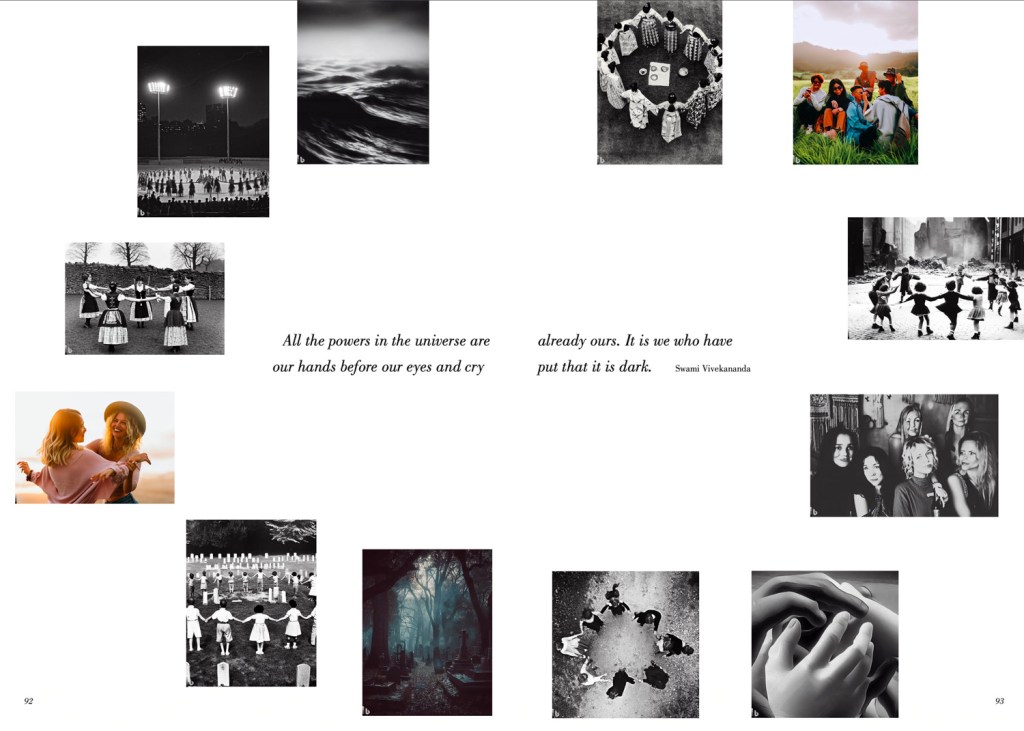

It is fascinating to compare the HMI and AIGI imagery, and we wanted to share that in the publication. So, in some cases, we’ve included both image types so readers can compare. Most of the image pairs can be identified because they share the same shape and size. All HMIs also sit on the left-hand side of their paired AIGI.

In both cases, we decided that a subtle approach might be more entertaining, as it would leave it in the reader’s hands to interpret or guess which images are which.

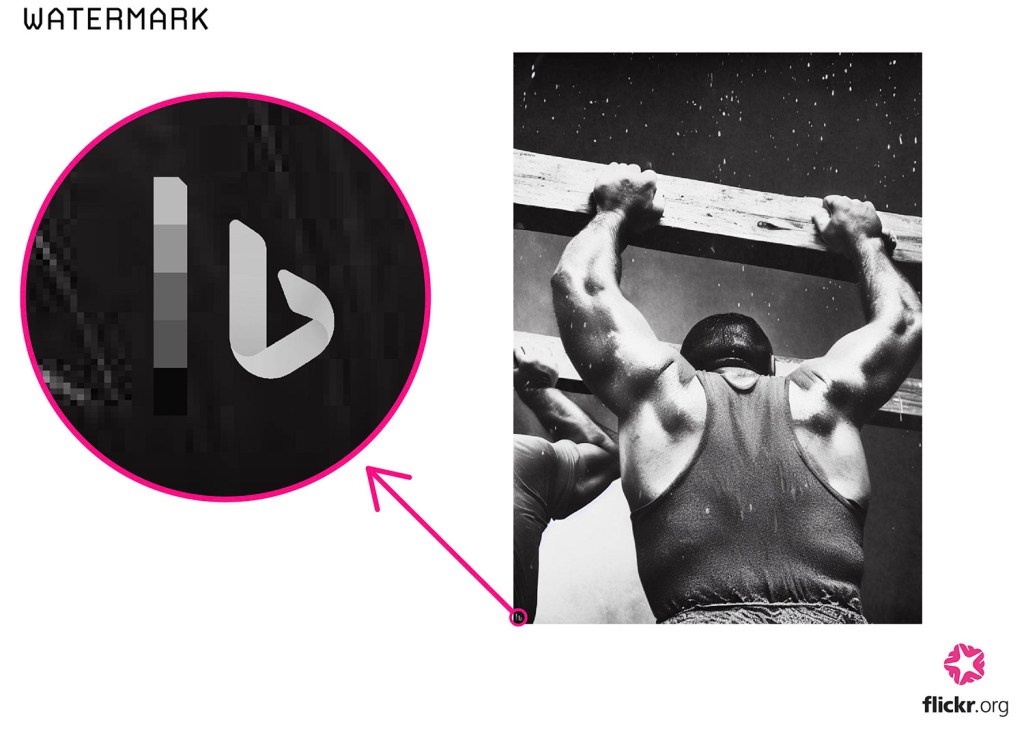

To watermark, or not to watermark?

Another issue that came up was around how to make it clear which images are AI-generated as there are a few images that are actual photographs. All AI images generated by Bing’s Image Creator come out with a watermark in the bottom left corner. As we made the layout, some of the original watermarks were cropped or moved out of the frame, so we decided to add the watermarks back into the AI-generated images in the bottom left corner so there is a way to identify which images are AI-generated.
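For anyone wanting to script this step instead of placing each watermark by hand in a layout tool, the placement itself is simple arithmetic. The sketch below is illustrative only: `bottom_left_position` is a hypothetical helper, and it assumes pixel coordinates with the origin at the top-left corner of the image, which is the convention most image libraries use.

```python
from typing import Tuple


def bottom_left_position(image_size: Tuple[int, int],
                         mark_size: Tuple[int, int],
                         margin: int = 0) -> Tuple[int, int]:
    """Return the top-left (x, y) at which to paste a watermark so that it
    sits in the bottom-left corner of the image, inset by `margin` pixels."""
    image_w, image_h = image_size
    mark_w, mark_h = mark_size
    if mark_w + margin > image_w or mark_h + margin > image_h:
        raise ValueError("watermark (plus margin) does not fit inside the image")
    # x is measured from the left edge, y from the top edge.
    return (margin, image_h - mark_h - margin)


if __name__ == "__main__":
    # Sizes here are made-up example values, not the generator's real output sizes.
    print(bottom_left_position((1024, 1024), (48, 48)))
    print(bottom_left_position((1024, 1024), (48, 48), margin=8))
```

The returned coordinates could then be passed to whichever image library's paste or composite function you prefer.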

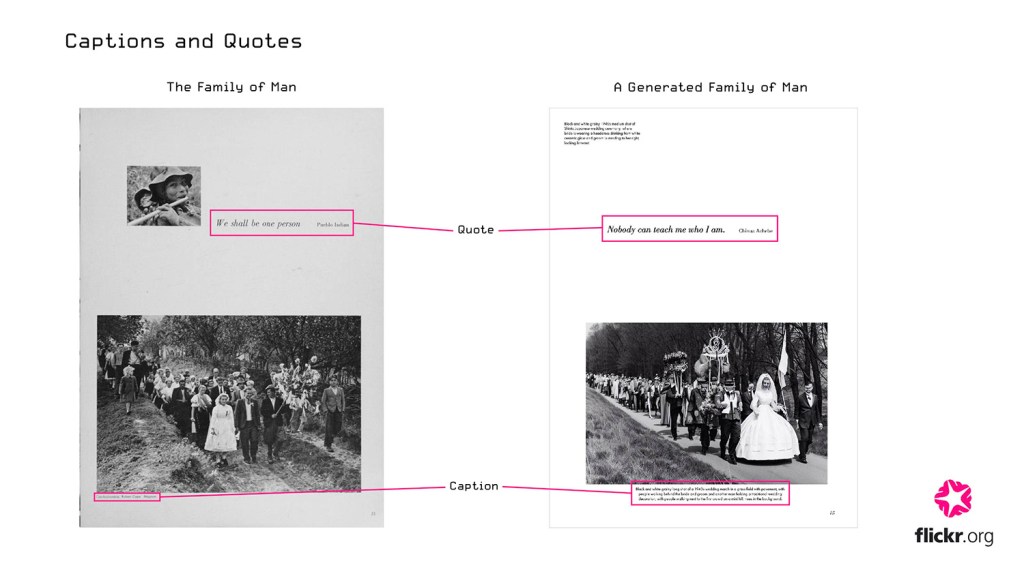

Captions and quotes

In the original The Family of Man catalog, each image has a caption to show the photographer’s name, the country the photograph was taken in, and any organizations the photograph is associated with. There are also quotes that are featured throughout the book.

For A Generated Family of Man we decided to use the same typefaces and font sizes as the original publication.

We decided to display the captions that were used to generate the images because we wanted to illustrate our inputs, and also those that were computer-generated. Our captions are much longer than the originals, so to prevent the pages from looking too cluttered, we added captions to a small selection of images. We decided to swap out the original quotes for quotes that are more relevant to the 21st century.

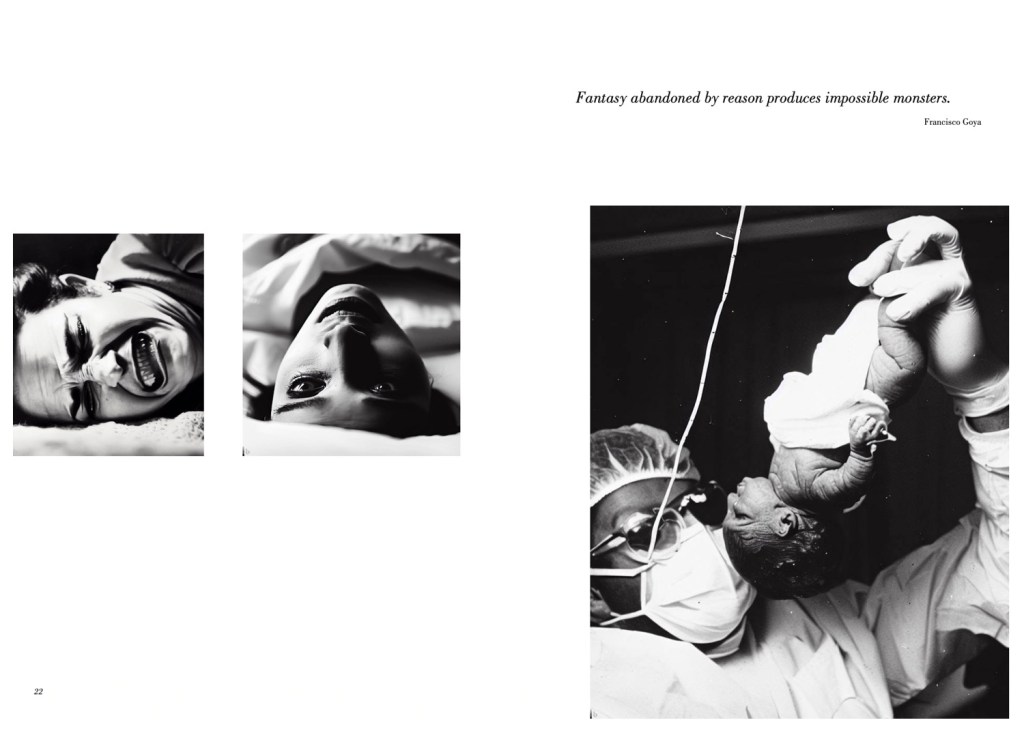

Below you can see some example pages from A Generated Family of Man.

Reflection

I had never really thought about AI that much before working on this project. I’ve spent weeks generating hundreds of images and I’ve gotten familiar with communicating with Bing’s Image Creator. I’ve been impressed by what it can do while being amused and often horrified by the weird humans it generates. It feels strange to be able to produce an image in a matter of seconds that is of such high quality, especially when we look at images that are not photo-realistic but done in an illustrative style. In ‘On AI-Generated Works, Artists, and Intellectual Property’, Ryan Merkley says, ‘There is little doubt that these new tools will reshape economies, but who will benefit and who will be left out?’ As a designer, it makes me feel a little worried about my future career, as it feels almost inevitable, especially in a commercial setting, that AI will leave many visual designers redundant.

Generative AI is still in its infancy (Bing’s Image Creator was only announced and launched in late 2022!) and soon enough it will be capable of producing life-like images that are indistinguishable from the real thing, if it isn’t already. For this project we used Bing’s Image Creator, but it would be interesting to see how this project would turn out if we used another image generator such as Midjourney, which many consider to be at the top of its game.

There are bound to be many pros and cons to being able to generate flawless images and I am simultaneously excited and terrified to see what the future holds within the field of generative AI and AI technology at large.