Last year, we built Flickypedia, a new tool for copying photos from Flickr to Wikimedia Commons. As part of our planning, we asked for feedback on Flickr2Commons and analysed other tools. We spotted two consistent themes in the community’s responses:

- Write more structured data for Flickr photos

- Do a better job of detecting duplicate files

We tried to tackle both of these in Flickypedia, and initially, we were just trying to make our uploader better. Only later did we realize that we could take our work a lot further, and retroactively apply it to improve the metadata of the millions of Flickr photos already on Wikimedia Commons. At that moment, Flickypedia Backfillr Bot was born. Last week, the bot completed its millionth update, and we guesstimate we will be able to operate on another 13 million files.

The main goals of the Backfillr Bot are to improve the structured data for Flickr photos on Wikimedia Commons and to make it easier to find out which photos have been copied across. In this post, I’ll talk about what the bot does, and how it came to be.

Write more structured data for Flickr photos

There are two ways to add metadata to a file on Wikimedia Commons: by writing Wikitext or by creating structured data statements.

When you write Wikitext, you write your metadata in a MediaWiki-specific markup language that gets rendered as HTML. This markup can be written and edited by people, and the rendered HTML is designed to be read by people as well. Here’s a small example, which adds some metadata to a file, linking it back to the original Flickr photo:

    == {{int:filedesc}} ==
    {{Information
    |Description={{en|1=Red-whiskered Bulbul photographed in Karnataka, India.}}
    |Source=https://www.flickr.com/photos/shivanayak/12448637/
    |Author=[[:en:User:Shivanayak|Shiva shankar]]
    |Date=2005-05-04
    |Permission=
    |other_versions=
    }}

and here’s what that Wikitext looks like when rendered as HTML:

This syntax is convenient for humans, but it’s fiddly for computers – it can be tricky to extract key information from Wikitext, especially when things get more complicated.
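To make the "fiddly for computers" point concrete, here is a minimal sketch of pulling one field out of the Wikitext above with a regular expression. The function name `extract_field` is hypothetical, and the approach only works for the simple one-line-per-field layout shown in this example.

```python
import re
from typing import Optional

WIKITEXT = """== {{int:filedesc}} ==
{{Information
|Description={{en|1=Red-whiskered Bulbul photographed in Karnataka, India.}}
|Source=https://www.flickr.com/photos/shivanayak/12448637/
|Author=[[:en:User:Shivanayak|Shiva shankar]]
|Date=2005-05-04
|Permission=
|other_versions=
}}"""


def extract_field(wikitext: str, field: str) -> Optional[str]:
    """Return the value of a `|field=value` line, or None if it is absent.

    Naive on purpose: it assumes every field sits on a single line.
    """
    pattern = rf"^\|[ \t]*{re.escape(field)}[ \t]*=[ \t]*(.*)$"
    match = re.search(pattern, wikitext, flags=re.MULTILINE)
    return match.group(1).strip() if match else None


print(extract_field(WIKITEXT, "Source"))
```

This breaks as soon as a value spans several lines, the URL is wrapped in another template, or the field name is spelled differently, which is the kind of complication that makes Wikitext hard to process reliably.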

In 2017, Wikimedia Commons added support for structured data. This allows editors to add metadata in a machine-readable format. This makes it much easier to edit metadata programmatically, and there’s a strong desire from the community for new tools to write high-quality structured metadata that other tools can use.

When you add structured data to a file, you create “statements” which are attached to properties. The list of properties is chosen by the volunteers in the Wikimedia community.

For example, there’s a property called “source of file” which is used to indicate where a file came from. The file in our example has a single statement for this property, which says the file is available on the Internet, and points to the original Flickr URL:

Structured data is exposed via an API, and you can retrieve this information in nice machine-readable XML or JSON:

    $ curl 'https://commons.wikimedia.org/w/api.php?action=wbgetentities&sites=commonswiki&titles=File%3ARed-whiskered%20Bulbul-web.jpg&format=xml'
    <?xml version="1.0"?>
    <api success="1">
      …
      <P7482>
        …
        <P973>
          <_v snaktype="value" property="P973">
            <datavalue
              value="https://www.flickr.com/photos/shivanayak/12448637/"
              type="string"/>
          </_v>
        </P973>
        …
      </P7482>
    </api>

(Here “P7482” means “source of file” and “P973” is “described at URL”.)
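The same lookup can be done on the JSON form of the response. The sketch below works on a hand-written sample rather than a live API call, and the shape of that sample is an assumption: it mirrors the XML excerpt above, with the URL stored as a P973 qualifier on a P7482 statement. The entity ID `M0` and the function name `source_urls` are made up for illustration.

```python
from typing import Any, Dict, List

# Hand-written stand-in for a parsed `format=json` response (assumed shape).
SAMPLE_RESPONSE: Dict[str, Any] = {
    "entities": {
        "M0": {  # hypothetical entity ID
            "statements": {
                "P7482": [
                    {
                        "mainsnak": {"snaktype": "value", "property": "P7482"},
                        "qualifiers": {
                            "P973": [
                                {
                                    "snaktype": "value",
                                    "property": "P973",
                                    "datavalue": {
                                        "value": "https://www.flickr.com/photos/shivanayak/12448637/",
                                        "type": "string",
                                    },
                                }
                            ]
                        },
                    }
                ]
            }
        }
    }
}


def source_urls(response: Dict[str, Any]) -> List[str]:
    """Collect every "described at URL" (P973) qualifier found on a
    "source of file" (P7482) statement."""
    urls = []
    for entity in response.get("entities", {}).values():
        # Tolerate either key name, and an empty value when nothing is set.
        statements = entity.get("statements") or entity.get("claims") or {}
        for statement in statements.get("P7482", []):
            for qualifier in statement.get("qualifiers", {}).get("P973", []):
                if qualifier.get("snaktype") == "value":
                    urls.append(qualifier["datavalue"]["value"])
    return urls


print(source_urls(SAMPLE_RESPONSE))
```

Compared with scraping Wikitext, there is no guessing about layout here: the property IDs say exactly what each value means.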

Part of being a good structured data citizen is following the community’s established patterns for writing structured data. Ideally every tool would create statements in the same way, so the data is consistent across files – this makes it easier to work with later.

We spent a long time discussing how Flickypedia should use structured data, and we got a lot of helpful community feedback. We’ve documented our current data model as part of our Wikimedia project page.

Do a better job of detecting duplicate files

If a photo has already been copied from Flickr onto Wikimedia Commons, nobody wants to copy it a second time.

This sounds simple – just check whether the photo is already on Commons, and don’t offer to copy it if it’s already there. In practice, it’s quite tricky to tell if a given Flickr photo is on Commons. There are two big challenges:

- Files on Wikimedia Commons aren’t consistent in where they record the URL of the original Flickr photo. Newer files put the URL in structured data; older files only put the URL in Wikitext or the revision descriptions. You have to look in multiple places.

- Files on Wikimedia Commons aren’t consistent about which form of the Flickr URL they use – with and without a trailing slash, with the user NSID or their path alias, or the myriad other URL patterns that have been used in Flickr’s twenty-year history.

Here’s a sample of just some of the different URLs we saw in Wikimedia Commons:

https://www.flickr.com/photos/joyoflife//44627174

https://farm5.staticflickr.com/4586/37767087695_bb4ecff5f4_o.jpg

www.flickr.com/photo_edit.gne?id=3435827496

https://www.flickr.com/photo.gne?short=2ouuqFT

There’s no easy way to query Wikimedia Commons and see if a Flickr photo is already there. You can’t, for example, do a search for the current Flickr URL and be sure you’ll find a match – it wouldn’t find any of the examples above. You can combine various approaches that will improve your chances of finding an existing duplicate, if there is one, but it’s a lot of work and you get varying results.

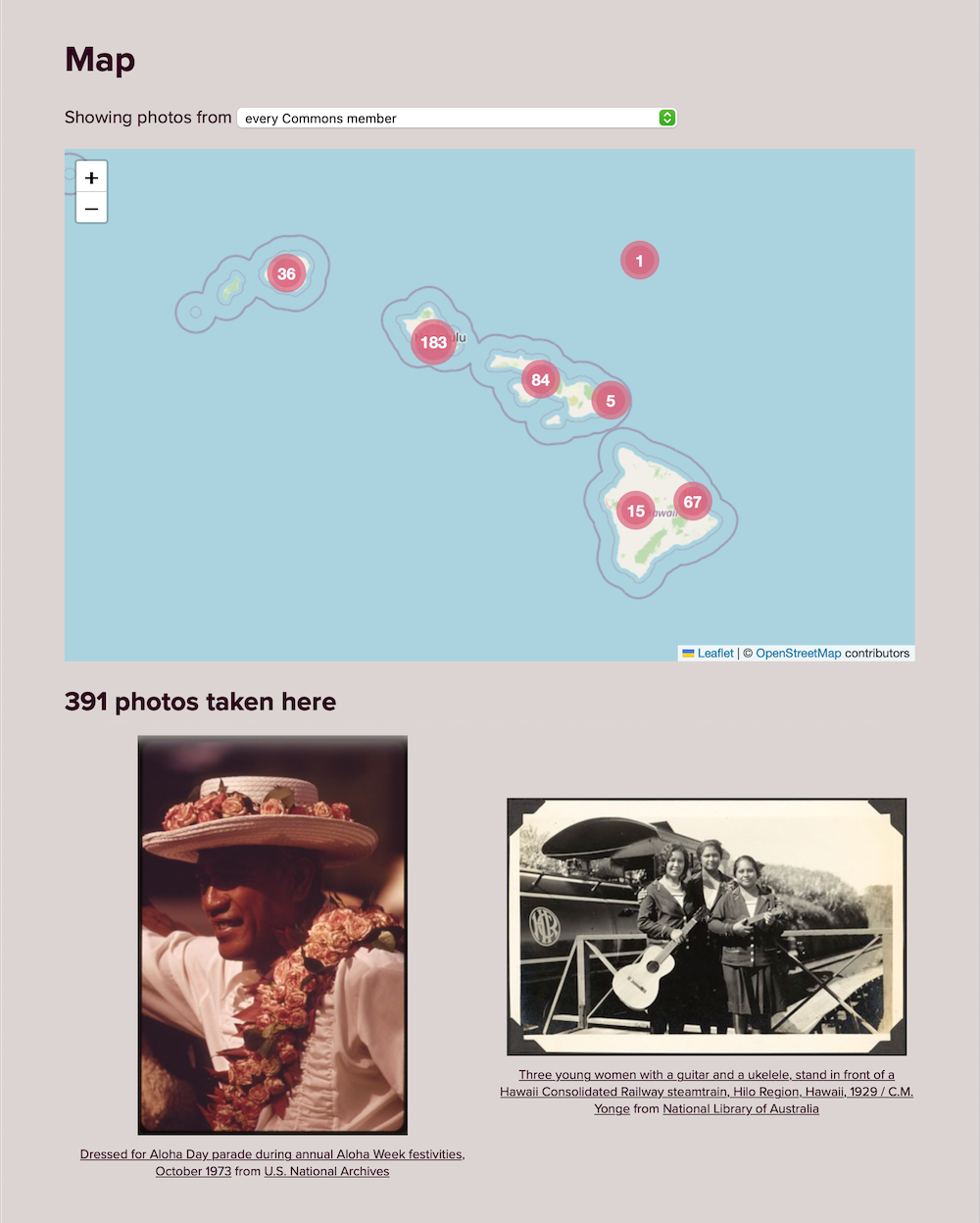

For the first version of Flickypedia, we took a different approach. We downloaded snapshots of the structured data for every file on Wikimedia Commons, and we built a database of all the links between files on Wikimedia Commons and Flickr photos. For every file in the snapshot, we looked at the structured data properties where we might find a Flickr URL. Then we tried to parse those URLs using our Flickr URL parsing library, and find out what Flickr photo they point at (if any).
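To give a flavour of what that URL parsing involves, here is a much-simplified sketch that handles only the four sample URLs listed earlier. It is not the API of our URL parsing library; the names `find_photo_id` and `base58_decode` are hypothetical, and the base58 alphabet for short URLs is stated here as an assumption about Flickr's encoding.

```python
import re
from typing import Optional
from urllib.parse import parse_qs, urlparse

# Assumed alphabet for Flickr short IDs: base58, digits and lowercase first.
BASE58_ALPHABET = "123456789abcdefghijkmnopqrstuvwxyzABCDEFGHJKLMNPQRSTUVWXYZ"


def base58_decode(text: str) -> int:
    """Decode a base58 short ID; raises ValueError on an unknown character."""
    number = 0
    for char in text:
        number = number * 58 + BASE58_ALPHABET.index(char)
    return number


def find_photo_id(url: str) -> Optional[str]:
    """Return the numeric Flickr photo ID in a URL, or None if not recognised."""
    if "://" not in url:
        url = "https://" + url  # some stored URLs omit the scheme
    parts = urlparse(url)
    host = parts.netloc.lower()

    # Static image URLs: the filename starts with "<photo id>_<secret>".
    if host.endswith(".staticflickr.com"):
        filename = parts.path.rsplit("/", 1)[-1]
        match = re.match(r"(\d+)_[0-9a-f]+", filename)
        return match.group(1) if match else None

    if host not in ("flickr.com", "www.flickr.com"):
        return None

    # Legacy .gne pages carry the ID in the query string.
    if parts.path in ("/photo.gne", "/photo_edit.gne"):
        query = parse_qs(parts.query)
        if "id" in query and query["id"][0].isdigit():
            return query["id"][0]
        if "short" in query:
            try:
                return str(base58_decode(query["short"][0]))
            except ValueError:
                return None
        return None

    # Regular photo pages, tolerating doubled or trailing slashes.
    match = re.match(r"/photos/[^/]+/+(\d+)/*$", parts.path)
    return match.group(1) if match else None
```

With this sketch, the four sample URLs resolve to the photo IDs 44627174, 37767087695, 3435827496 and 52830949513. A real parser has to cover many more URL shapes than this.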

This gave us a SQLite database that mapped Flickr photo IDs to Wikimedia Commons filenames. We could use this database to do fast queries to find copies of a Flickr photo that already exist on Commons. This proved the concept, but it had a few issues:

- It was an incomplete list – we only looked in the structured data, and not the Wikitext. We estimate we were missing at least a million photos.

- Nobody else can use this database; it only lives on the Flickypedia server. Theoretically somebody else could create it themselves – the snapshots are public, and the code is open source – but it seems unlikely.

- This database is only as up-to-date as the latest snapshot we’ve downloaded – it could easily fall behind what’s on Wikimedia Commons.
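For illustration, a lookup database of this kind can be sketched in a few lines with Python's built-in `sqlite3` module. The table and column names below are assumptions made for this example, not the schema Flickypedia actually uses.

```python
import sqlite3
from typing import Iterable, List, Tuple


def build_index(links: Iterable[Tuple[str, str]]) -> sqlite3.Connection:
    """Build an in-memory index of (Flickr photo ID, Commons filename) pairs."""
    connection = sqlite3.connect(":memory:")
    connection.execute(
        """
        CREATE TABLE flickr_photos_on_commons (
            flickr_photo_id  TEXT NOT NULL,
            commons_filename TEXT NOT NULL,
            PRIMARY KEY (flickr_photo_id, commons_filename)
        )
        """
    )
    # One Flickr photo may have been copied several times, so the key is
    # the pair; repeated pairs from the snapshot are silently skipped.
    connection.executemany(
        "INSERT OR IGNORE INTO flickr_photos_on_commons VALUES (?, ?)", links
    )
    connection.commit()
    return connection


def find_copies(connection: sqlite3.Connection, flickr_photo_id: str) -> List[str]:
    """Return the Commons filenames already linked to a Flickr photo ID."""
    rows = connection.execute(
        "SELECT commons_filename FROM flickr_photos_on_commons "
        "WHERE flickr_photo_id = ? ORDER BY commons_filename",
        (flickr_photo_id,),
    )
    return [row[0] for row in rows]


index = build_index([("12448637", "File:Red-whiskered Bulbul-web.jpg")])
print(find_copies(index, "12448637"))
```

The primary key lookup is what makes duplicate checks fast, but the sketch shares the limitations listed above: it only knows about links that were in the snapshot it was built from.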

We wanted to make this process easier – both for ourselves, and anybody else building Flickr–Wikimedia Commons integrations.

Adding the Flickr Photo ID property

Every photo on Flickr has a unique numeric ID, so we proposed a new Flickr photo ID property to add to structured data on Wikimedia Commons. This proposal was discussed and accepted by the Wikimedia Commons community, and gives us a better way to match files on Wikimedia Commons to photos on Flickr:

This is a single field that you can query, and there’s an unambiguous, canonical way that values should be stored in this field – you don’t need to worry about the different variants of Flickr URL.
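As a rough sketch of what querying a single field can look like, the snippet below builds a Commons search URL using the `haswbstatement` search keyword. Two assumptions are baked in and worth checking before relying on it: that the Flickr photo ID property is P12120 (the ID is not given in this post), and that a plain file-namespace search is the right entry point for your tool. The function only builds the URL and makes no network request.

```python
from urllib.parse import urlencode

COMMONS_API = "https://commons.wikimedia.org/w/api.php"

# Assumption: property ID for "Flickr photo ID"; verify on Wikimedia Commons.
FLICKR_PHOTO_ID_PROPERTY = "P12120"


def build_search_url(flickr_photo_id: str) -> str:
    """Build a search URL for files whose structured data has this photo ID."""
    params = {
        "action": "query",
        "list": "search",
        "srsearch": f"haswbstatement:{FLICKR_PHOTO_ID_PROPERTY}={flickr_photo_id}",
        "srnamespace": "6",  # the File: namespace
        "format": "json",
    }
    return f"{COMMONS_API}?{urlencode(params)}"


print(build_search_url("12448637"))
```

Because the value is always the bare numeric ID, the query does not need to know which of the many Flickr URL forms the original uploader used.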

We added this field to Flickypedia, so any files uploaded with our tool will get this new field, and we hope that other Flickr upload tools will consider adding this field as well. But what about the millions of Flickr photos already on Wikimedia Commons? This is where Flickypedia Backfillr Bot was born.

Updating millions of files

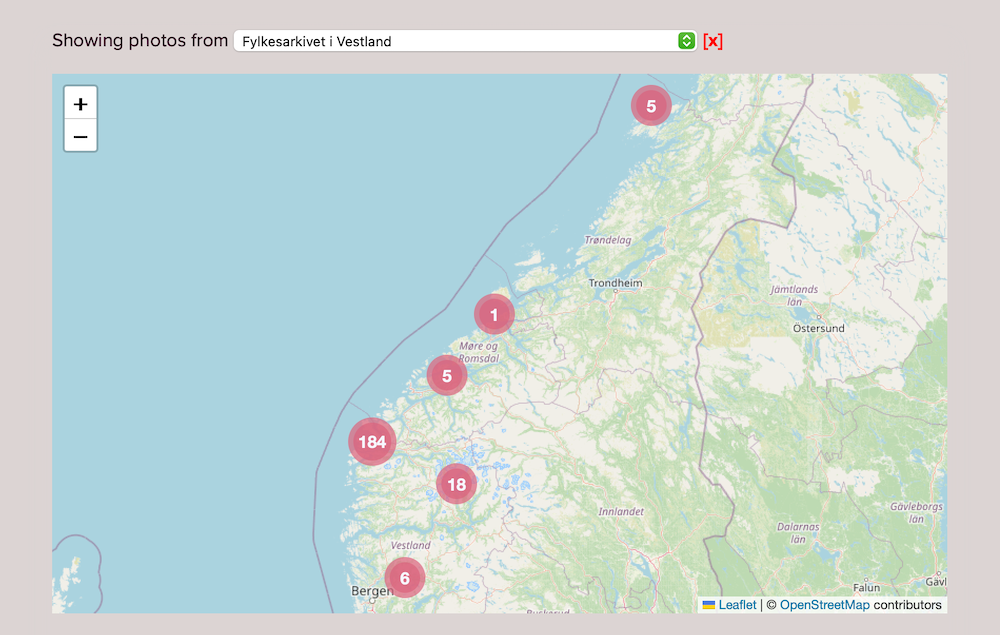

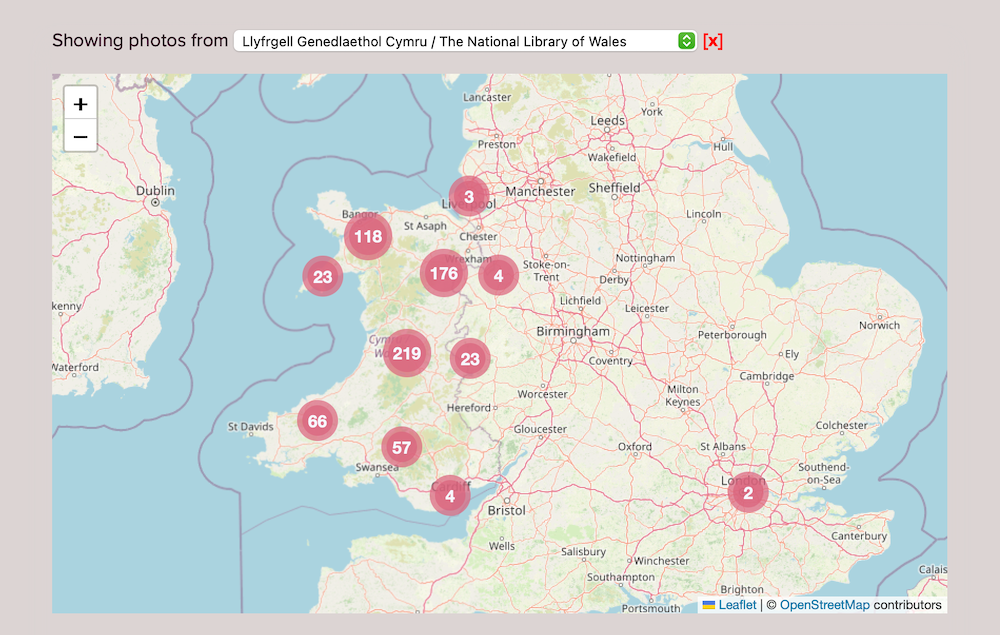

Flickypedia Backfillr Bot applies our structured data mapping to every Flickr photo it can find on Wikimedia Commons – whether or not it was uploaded with Flickypedia. For every photo which was copied from Flickr, it compares the structured data to the live Flickr metadata, and updates the structured data if the two don’t match. This includes the Flickr Photo ID.

It reuses code from our duplicate detector: it goes through a snapshot looking for any files that come from Flickr photos. Then it gets metadata from Flickr, checks if the structured data matches that metadata, and if not, it updates the file on Wikimedia Commons.

Here’s a brief sketch of the process:

Most of the time this logic is fairly straightforward, but occasionally the bot will get confused – this is when the bot wants to write a structured data statement, but there’s already a statement with a different value. In this case, the bot will do nothing and flag it for manual review. There are edge cases and unusual files in Wikimedia Commons, and it’s better for the bot to do nothing than write incorrect or misleading data that will need to be reverted later.
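The conservative rule described above can be sketched as a small decision function. This is a simplification under stated assumptions: statements are reduced to plain strings, and the names `Action` and `decide` are hypothetical rather than taken from the bot's code.

```python
from enum import Enum
from typing import Sequence


class Action(Enum):
    ADD_STATEMENT = "add statement"
    DO_NOTHING = "do nothing"
    FLAG_FOR_REVIEW = "flag for manual review"


def decide(existing_values: Sequence[str], new_value: str) -> Action:
    """Decide what to do for one property of one file.

    existing_values: values already in the structured data on Commons.
    new_value: the value derived from the live Flickr metadata.
    """
    if not existing_values:
        # Nothing recorded yet, so it is safe to write the new statement.
        return Action.ADD_STATEMENT
    if all(value == new_value for value in existing_values):
        # The structured data already matches Flickr.
        return Action.DO_NOTHING
    # A different value is already present: never overwrite it.
    return Action.FLAG_FOR_REVIEW


print(decide([], "12448637"))
print(decide(["Leire Cano"], "Donostia Kultura"))
```

The important design choice is that the third branch never edits anything: a conflict always goes to a person, which keeps the bot from replacing good data with worse data.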

Here are two examples:

- Sometimes Wikimedia Commons has more specific metadata than Flickr. For example, this Flickr photo was posted by the Donostia Kultura account, and the description identifies Leire Cano as the photographer.

Flickypedia Backfillr Bot wants to add a creator statement for “Donostia Kultura”, because it can’t understand the description – but when this file was copied to Wikimedia Commons, somebody added a more specific creator statement for “Leire Cano”.

The bot isn’t sure which statement is correct, so it does nothing and flags this for manual review – and in this case, we’ve left the existing statement as-is.

- Sometimes existing data on Wikimedia Commons has been mapped incorrectly. For example, this Flickr photo was taken “circa 1943”, but when it was copied to Wikimedia Commons somebody added an overly precise “date taken” statement claiming it was taken on “1 Jan 1943”.

This bug probably occurred because of a misunderstanding of the Flickr API. The Flickr API will always return a complete timestamp in the “date” field, and then return a separate granularity value telling you how accurate it is. If you ignored that granularity value, you’d create an incorrect statement of what the date is.

The bot isn’t sure which statement is correct, so it does nothing and flags this for manual review – and in this case, we made a manual edit to replace the statement with the correct date.
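The date problem in the second example can be illustrated with a short sketch. It assumes the granularity codes as I understand them from the Flickr API documentation (0 for a full timestamp, 4 for month, 6 for year, 8 for circa); the function name and the shape of the returned dictionary are made up for this example.

```python
from datetime import datetime
from typing import Any, Dict, Union

# Assumed Flickr granularity codes: (strftime format, precision, is circa)
GRANULARITY = {
    0: ("%Y-%m-%d", "day", False),  # full timestamp; kept to day precision here
    4: ("%Y-%m", "month", False),
    6: ("%Y", "year", False),
    8: ("%Y", "year", True),        # "circa": only the year is meaningful
}


def interpret_date_taken(taken: str, granularity: Union[int, str]) -> Dict[str, Any]:
    """Trim a complete Flickr timestamp down to the precision it really has."""
    moment = datetime.strptime(taken, "%Y-%m-%d %H:%M:%S")
    try:
        date_format, precision, circa = GRANULARITY[int(granularity)]
    except KeyError:
        raise ValueError(f"Unrecognised granularity: {granularity!r}")
    return {
        "value": moment.strftime(date_format),
        "precision": precision,
        "circa": circa,
    }


print(interpret_date_taken("1943-01-01 00:00:00", 8))
```

Ignoring the granularity and keeping the whole timestamp is what turns “circa 1943” into “1 Jan 1943”.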

What next?

We’re going to keep going! There were a few teething problems when we started running the bot, but the Wikimedia community helped us fix our mistakes. It’s now been running for a month or so, and has processed over a million files.

All the Flickypedia code is open source on GitHub, and a lot of it isn’t specific to Flickr – it’s general-purpose code for working with structured data on Wikimedia Commons, and could be adapted to build similar bots. We’ve already had conversations with a few people about other use cases, and we’ve got some sketches for how that code could be extracted into a standalone library.

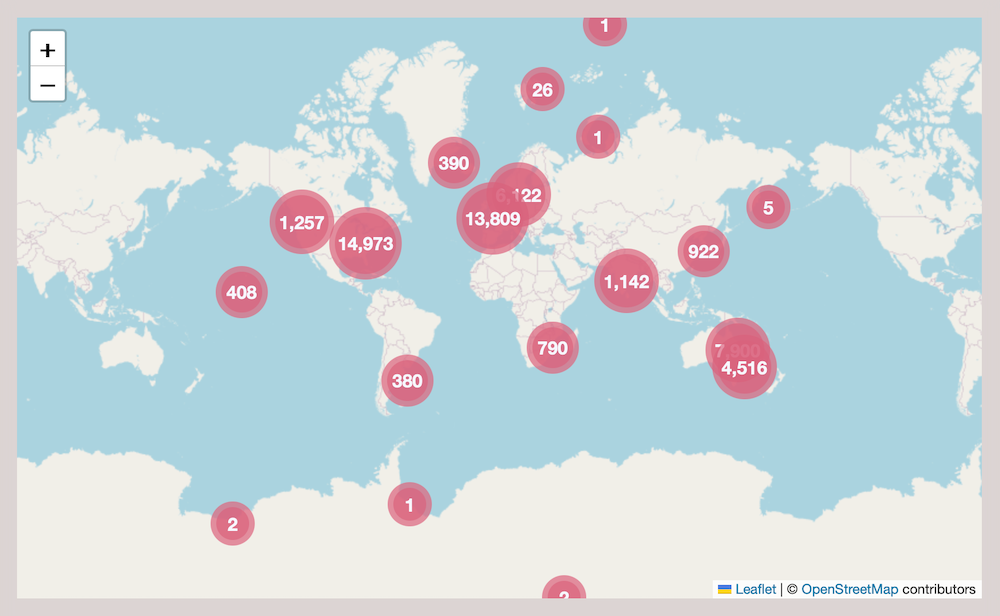

We estimate that at least 14 million files on Wikimedia Commons are photos that were originally uploaded to Flickr – more than 10% of all the files on Commons. There’s plenty more to do. Onwards and upwards!